What's Behind a Virtual Try-On Solution

Picture this: In 1995, Clueless impressed us with the computerized closet that could preview outfits. Back then, it was pure Hollywood magic — the kind of tech we’d dream about but never thought we’d actually see. Fast forward to today, and what do you know? We’ve not only matched the virtual wardrobe but made it a lot more complete.

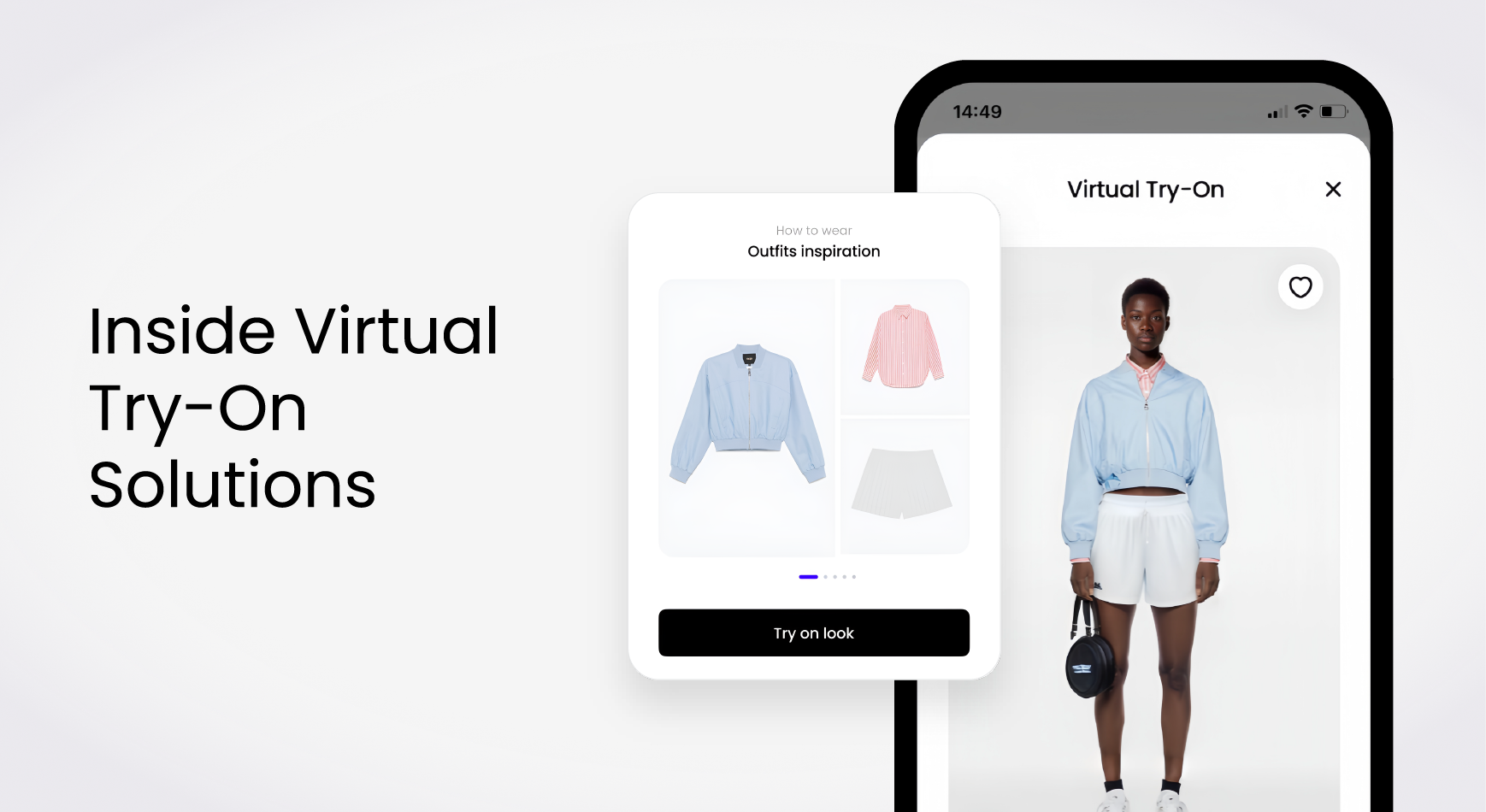

In recent years, generative AI has transformed industries, and fashion e-commerce is no exception. Virtual try-on is now a reality, and a highly sophisticated one, offering consumers the ability to “try” clothes without physically wearing them. But how does this technology actually work?

The Foundation: What Are Generative Models?

At their core, generative models are artificial intelligence systems designed to create new content based on patterns learned from existing data. They can produce entirely new outputs, whether that’s images, text, music, or, in our case, visualizations of clothing on the human body.

When it comes to fashion applications, these models analyze diverse data containing thousands of clothing items worn by people of various shapes, sizes, and proportions. Through this analysis, the AI learns to understand critical elements like:

- How different fabrics drape on different bodies

- How colors interact with different skin tones

- How garments interact with body movement

- How textures and patterns transform when wrapped around 3D shapes

- How garments layer over one another

What makes virtual try-on particularly challenging is the importance of context. A dress doesn’t just look different on various body types; it also changes with variables like those listed above, from how the fabric drapes to how the body moves and how garments layer. This contextual complexity is why simple overlay technologies fail to create truly convincing results.
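To make the limitation of a simple overlay concrete, here is a minimal sketch in plain Python. It assumes tiny grayscale "images" stored as nested lists, and the `overlay` function and sample values are purely illustrative, not part of any real try-on system. The point it shows: an alpha blend pastes the same garment pixels no matter who is in the photo.

```python
def overlay(person, garment, alpha):
    """Alpha-blend garment pixels over person pixels (grayscale, 0-255).

    alpha is 1.0 where the garment fully covers the photo and 0.0 where
    the original photo shows through.
    """
    return [
        [round(a * g + (1 - a) * p) for p, g, a in zip(p_row, g_row, a_row)]
        for p_row, g_row, a_row in zip(person, garment, alpha)
    ]

# Two hypothetical 1x3 "photos" of different people.
person_a = [[10, 20, 30]]
person_b = [[200, 210, 220]]

# The same garment and mask are applied to both.
garment = [[0, 128, 0]]
alpha = [[0.0, 1.0, 0.0]]

result_a = overlay(person_a, garment, alpha)
result_b = overlay(person_b, garment, alpha)

# The covered pixel is identical in both results: the blend never
# looks at body shape, pose, or skin tone.
print(result_a, result_b)
```

Because the garment region comes out the same for every person, nothing in this approach can account for drape, proportions, or layering.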

In our constant search for innovative solutions, our team expanded on that initial idea and built our technology with a broader scope in mind. As our senior data scientist, Yuri Viazovetskyi, highlighted:

“Instead of relying on a single generative model, our solution uses several specialized models to parse user-specific features, like body type and skin tone, alongside detailed apparel characteristics.”
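As a rough illustration of the "several specialized models" idea, the sketch below chains independent feature extractors and merges their outputs. Everything here is an assumption made for illustration: the stage names, the feature keys, and the string placeholders standing in for real model outputs. It is not Aiuta's actual architecture.

```python
from typing import Callable, Dict, List

# Each "model" is a stand-in: a plain function from raw inputs to named features.
# Stage names and feature keys are hypothetical.
Stage = Callable[[Dict[str, str]], Dict[str, str]]


def body_type_stage(inputs: Dict[str, str]) -> Dict[str, str]:
    # Placeholder for a model that estimates body type from the user photo.
    return {"body_type": f"parsed from {inputs['user_photo']}"}


def skin_tone_stage(inputs: Dict[str, str]) -> Dict[str, str]:
    # Placeholder for a model that estimates skin tone from the user photo.
    return {"skin_tone": f"parsed from {inputs['user_photo']}"}


def apparel_stage(inputs: Dict[str, str]) -> Dict[str, str]:
    # Placeholder for a model that describes the garment.
    return {"apparel": f"parsed from {inputs['garment_photo']}"}


def extract_features(stages: List[Stage], inputs: Dict[str, str]) -> Dict[str, str]:
    """Run every specialized stage and merge the features it returns."""
    features: Dict[str, str] = {}
    for stage in stages:
        features.update(stage(inputs))
    return features


features = extract_features(
    [body_type_stage, skin_tone_stage, apparel_stage],
    {"user_photo": "user.jpg", "garment_photo": "dress.jpg"},
)
print(features)
```

Keeping each extractor separate means one stage can be retrained or replaced without touching the others, which is one plausible reason to prefer several specialized models over a single monolithic one.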

The Evolution Continues

Research in this field continues to advance rapidly. Now we’re watching clothes move and flow on our screens as they would in a physical fitting room.

At Aiuta, we’re constantly pushing the boundaries of what’s possible. Yuri walked us through ASPECT, one of our proprietary technologies, which helps bridge the gap between real and digital:

“Our body type preservation model (we call it ASPECT, short for AIUTA Shape Preservation and Enhancement Control Technology) evolved in a way that’s quite typical for machine learning systems.

“We’re constantly improving the models by training them on larger datasets and tackling more challenging cases. In parallel, we’ve begun a new research effort to integrate actual garment dimensions, bridging the gap between virtual try-on and the real fitting room experience.”

Looking Forward

As generative AI continues to evolve, we can expect virtual try-on to become increasingly accurate and personalized. For consumers, the future promises not just seeing how clothes might look, but experiencing how they might feel — creating a transformative shopping experience that bridges the gap between physical and digital retail.

Interested in seeing how AI can transform your fashion brand’s customer experience? Let’s continue this conversation.